Picture this: you wouldn't build a house without showing the blueprints to the family who will live there, right? That’s concept testing in a nutshell. It's the simple, powerful act of checking if your big idea actually resonates with your target audience before you pour time, money, and energy into building it.

It’s about swapping out internal guesswork for real-world feedback.

What Is Concept Testing in Simple Terms?

At its core, concept testing is a research method where you get a reaction from potential customers about a new product, service, or feature idea before you start development. Think of it as a dress rehearsal for your launch. You’re not building the whole thing; you're just presenting the core idea—the concept—to see if it clicks.

This simple step dramatically lowers the risk of innovation. It tells you if you're solving a real problem, if people understand what you're offering, and if anyone would actually want it. This isn't just a modern trend. By some estimates, before concept testing became a standard practice, new product failure rates in some sectors ran as high as 95%. By the 1980s, its widespread use had reportedly helped cut that number down to a more manageable 40-50% in major markets.

The Three Core Components of Concept Testing

To really get it, you just need to understand three moving parts. They’re simple, but they work together to give you the insights you need.

- The Concept: This is your idea, boiled down to its essence. It could be a simple written description, a rough sketch, a polished mockup, or even a quick video explaining what makes it special. The goal is to make it clear enough for someone to grasp its value without seeing a finished product.

- The Audience: These are the real people you hope will one day use your product. Getting this right is everything. You wouldn't ask a group of retired gardeners for feedback on a new SaaS tool for developers—the data would be useless.

- The Feedback: This is what you get back from your audience. It can be quantitative, like a rating scale for "how likely are you to buy this?" or qualitative, like open-ended comments on what they loved and hated. This feedback is gold; it’s what you'll use to improve, change direction, or even scrap the idea and move on.

Concept Testing At a Glance

Here’s a quick breakdown of these components and why they’re so important, especially when your team is working remotely.

| Component | What It Is | Why It Matters for Remote Teams |
|---|---|---|
| The Concept | The core idea presented in a simple, understandable format (e.g., mockup, description). | Creates a tangible focal point that everyone can see and discuss, regardless of their location. |
| The Audience | A representative sample of your intended users or customers. | Connects a geographically dispersed team directly to the real-world needs of their target market. |
| The Feedback | The qualitative and quantitative data collected from the audience about the concept. | Provides objective, customer-driven data that cuts through subjective opinions in virtual meetings. |

This simple loop—presenting an idea to the right people and listening to what they say—is the engine of smart product development.

Why It's Non-Negotiable for Remote Teams

For product teams that are spread out across different cities and time zones, concept testing isn't just a nice-to-have; it's a lifeline. It’s more than just a research method—it’s a powerful alignment tool.

When your team is distributed, it’s so easy for the vision to get fragmented or for assumptions to go unchecked. Misunderstandings can creep in over Slack and Zoom.

Concept testing provides a single source of truth—the voice of the customer—that unites everyone around a shared, validated goal. It ensures that every developer, designer, and marketer is building toward the same customer-centric outcome, preventing wasted effort and building collective confidence.

By grounding every debate and decision in actual user data instead of personal opinions, teams can move faster and with more conviction. This is especially critical once you've moved past the initial brainstorming and are starting to narrow down your focus. If you want to learn more about that early creative stage, you can explore our guide on the definition of ideation. Ultimately, this data-driven approach builds a culture of learning, which is exactly what you need to succeed.

Why and When Concept Testing Is Critical

So, you know what concept testing is. The bigger question is, why should your team bother? Think of it as a cheap insurance policy against building something nobody actually wants. It’s all about swapping out expensive guesswork for real-world data, right at the start of the development process.

Launching a new feature without testing the idea first is like driving blindfolded. You might have a brilliant idea bouncing around internally, but you’re essentially navigating a complex market based on gut feelings alone. That's a risky game, especially when studies show that fixing a problem after launch can cost four to five times more than catching it during the design phase. Concept testing is how you take off the blindfold.

It gives you a chance to see your idea through your customers' eyes. You get raw, honest feedback that broad market research can’t provide. Instead of just hoping you're on the right track, you get clear signals on what people love and what they don't, long before you sink serious time and money into development.

Knowing the Right Moments to Test

Concept testing isn’t a one-and-done deal. It’s a tool you pull out at specific, high-stakes moments in your project timeline. Knowing when to test is just as important as knowing how.

Here are the key moments when concept testing can be a game-changer:

- Before Writing a Single Line of Code: This is the big one. Getting validation here means your engineering team’s valuable time is spent on an idea that already has a green light from customers. This massively boosts your odds of success and keeps team morale high.

- When Deciding Between Competing Ideas: Product teams are constantly weighing different paths forward. Instead of getting stuck in endless internal debates, let your customers break the tie. Concept testing shows you which solution truly solves their problem.

- Prior to a Major Marketing Campaign: Your messaging is a product in itself. Testing ad concepts, taglines, or value propositions makes sure your marketing dollars are actually spent on a message that resonates.

- When Exploring a New Market or Persona: Moving into a new demographic? Concept testing helps you figure out their unique needs and whether your solution makes sense in their world before you commit to a full-scale launch.

It’s about making sure your idea hits the mark with your audience and spotting issues early, before launch. This early feedback loop can be a huge advantage, helping you build the right thing from day one. To learn more about that, see our guide on reducing time to market.

The Ultimate Alignment Tool for Remote Teams

For remote and distributed teams, concept testing does more than just validate ideas—it gets everyone on the same page. When your team is scattered across time zones, it's easy for assumptions to run wild and for communication to break down.

Concept testing provides objective, customer-driven data that anchors every conversation. It shifts discussions from "I think we should…" to "Our target users responded best to…" This removes ego from the equation and unites everyone around a shared, validated vision.

This shared understanding is powerful. Instead of wasting cycles in circular debates, your team can move forward with confidence, knowing their work is grounded in real customer feedback. Plus, this hard data is your best friend when you need to get buy-in from leadership—it’s tough to argue with solid evidence.

Choosing the Right Concept Testing Method

Once you're convinced that concept testing is a good idea, the next question is always, "Okay, but how do we actually do it?"

Picking the right method isn’t about finding one “perfect” approach. It’s about matching the tool to the job. You need to consider how far along your idea is, what resources you have, and exactly what you're trying to learn.

Think of it like choosing a camera. Sometimes a quick snapshot on your phone is all you need. Other times, for a really important shot, you need to break out the professional DSLR with all the lenses. A quick survey might be perfect for an early-stage idea, but a fully fleshed-out concept probably needs a more in-depth prototype test.

Understanding the Main Approaches

There are a few tried-and-true methods for concept testing, and each has its own quirks—especially when you’re working with a remote team. Most of them boil down to showing users one or more concepts and gathering their reactions in a structured way.

Let's break down the main contenders.

Monadic Testing

This one’s simple. Each person you test with sees only one concept, all by itself. They judge it on its own merits without any other ideas clouding their judgment. This is your go-to for getting a pure, unbiased read on a single idea.

- Best For: When you need to go deep on a single, well-defined concept and really understand its strengths and weaknesses.

- Remote Benefit: It keeps things short and sweet. Shorter tests mean your remote participants stay focused and give you better feedback.

Sequential Monadic Testing

This is a slight variation. Participants see multiple concepts, but they see them one after the other, evaluating each one before moving on to the next. It lets you get detailed feedback on a few different ideas while also getting a feel for which one comes out on top.

- Best For: When you have a small handful of distinct ideas (say, three different feature concepts) and want to see how each one holds up.

- Remote Challenge: Be careful with this one. It can lead to long sessions and test-taker fatigue. If you go this route, keep the number of concepts to a minimum.

Comparative Testing

This method is a straight-up showdown. You present two or more concepts side by side and ask people to pick a winner. It's a head-to-head competition designed to give you a clear answer.

- Best For: Making a decision between two very similar options, like a couple of different landing page headlines or UI tweaks.

- Remote Benefit: It’s fast and decisive. You get a clear signal on what people prefer, which is perfect for cutting through internal debates.
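A comparative test ultimately comes down to counting preferences. As a minimal sketch (the vote data and the `pick_winner` helper are hypothetical, not taken from any particular survey tool), the tally might look like this in Python:

```python
from collections import Counter


def pick_winner(votes):
    """Return (winner, share) for a list of preferred-concept labels.

    Returns (None, share) when the top concepts are tied.
    """
    if not votes:
        raise ValueError("no votes to tally")
    counts = Counter(votes)
    ranked = counts.most_common()
    top_label, top_count = ranked[0]
    share = top_count / len(votes)
    # A tie at the top means the test did not produce a clear preference.
    if len(ranked) > 1 and ranked[1][1] == top_count:
        return None, share
    return top_label, share


# Hypothetical responses: each entry is the headline one participant preferred.
votes = ["Headline A", "Headline B", "Headline A", "Headline A", "Headline B"]
winner, share = pick_winner(votes)
print(winner, f"{share:.0%}")
```

Reporting the share alongside the winner helps the team judge whether the preference is strong or only marginal.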

The key is to pick the method that matches the decision you need to make. Monadic testing tells you if an idea is good on its own. Comparative testing tells you which idea is better than another.

Choosing Your Concept Testing Method

With these methods in mind, how do you choose? Every project is different, so remote teams need to weigh the pros and cons based on their specific situation. This table breaks down the key considerations to help you pick the right approach for your next test.

| Method | Best For | Key Benefit for Remote Teams | Potential Challenge |
|---|---|---|---|
| Monadic Testing | Getting deep, unbiased feedback on a single, well-defined concept. | Focused and short, which keeps remote participants engaged. | Can be more expensive and time-consuming if you have many concepts to test. |
| Sequential Monadic Testing | Evaluating a small set of distinct concepts (2-4) individually. | Efficient way to gather detailed feedback on multiple ideas in one session. | Risk of participant fatigue and order bias (the first concept seen might have an advantage). |
| Comparative Testing | Deciding between two or more similar variations of an idea. | Fast, decisive, and great for breaking internal deadlocks quickly. | Doesn't tell you if any of the options are actually good, just which one is preferred. |

Ultimately, there's no single "best" method. The best choice is the one that gets you the specific answers you need to move forward with confidence, without overwhelming your participants or your budget.
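When several concepts are shown in one session, one common way to soften order bias is to vary which concept each participant sees first. The sketch below is an assumption about how a team might do that with a simple rotation; `rotated_orders` and the concept names are illustrative only.

```python
def rotated_orders(concepts, n_participants):
    """Give each participant the concept list rotated by one more position.

    With a participant count that is a multiple of len(concepts), every
    concept appears in every position equally often.
    """
    if not concepts:
        raise ValueError("need at least one concept")
    k = len(concepts)
    orders = []
    for i in range(n_participants):
        shift = i % k
        orders.append(concepts[shift:] + concepts[:shift])
    return orders


# Hypothetical concepts for a sequential monadic session.
concepts = ["Concept A", "Concept B", "Concept C"]
for participant, order in enumerate(rotated_orders(concepts, 6), start=1):
    print(participant, order)
```

A rotation balances position but not which concept precedes which; shuffling the order independently for each participant is another reasonable option.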

Key Metrics to Track in Your Tests

No matter which method you pick, you need to measure the right things. Good metrics are what turn subjective opinions into data you can act on. Your questions should always circle back to a few core themes to get a real sense of your concept's potential.

- Purchase Intent: This is the million-dollar question: "How likely would you be to buy or use this?" It’s a direct gut check on your idea's commercial viability.

- Appeal: This one gets at the emotional reaction. "How appealing do you find this concept?" It tells you if the idea actually sparks any interest or excitement.

- Uniqueness: This helps you figure out if you're just creating more noise. "How different is this from what's already out there?" A high score here is a great sign.

- Clarity: This is basic, but critical. "How easy or difficult is this concept to understand?" If people don't get it, they'll never buy it.
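To make these four metrics comparable across tests, responses are often collected on a rating scale and then summarized. The sketch below assumes a 1-to-5 scale and a "top-two-box" summary (the share of ratings of 4 or 5); the scale, the metric keys, and the sample ratings are all assumptions for illustration, not figures from a real study.

```python
from statistics import mean


def summarize(ratings_by_metric, top_box_min=4):
    """Return {metric: (mean rating, share of ratings >= top_box_min)}."""
    summary = {}
    for metric, ratings in ratings_by_metric.items():
        if not ratings:
            raise ValueError(f"no ratings for {metric}")
        top_share = sum(r >= top_box_min for r in ratings) / len(ratings)
        summary[metric] = (mean(ratings), top_share)
    return summary


# Hypothetical 1-5 ratings from eight participants.
ratings = {
    "purchase_intent": [5, 4, 3, 4, 2, 5, 4, 3],
    "appeal": [4, 4, 5, 3, 4, 5, 4, 4],
    "uniqueness": [3, 2, 4, 3, 3, 2, 4, 3],
    "clarity": [5, 5, 4, 5, 4, 5, 5, 4],
}
for metric, (avg, top_share) in summarize(ratings).items():
    print(f"{metric}: mean {avg:.2f}, top-two-box {top_share:.0%}")
```

Keeping the same scale and summary from one test to the next is what makes scores comparable over time.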

Tracking these metrics consistently over time helps you build a reliable system for spotting winning ideas. For teams that want to add more structure to their feedback sessions, the nominal group technique is also a great way to prioritize ideas with group input.

In the end, it’s all about finding smart ways to get customer feedback that actually tells you something useful.

Your Step-By-Step Guide to Remote Concept Testing

Alright, let's move from theory to action. Running a concept test, especially with a remote team, works best when you have a clear, repeatable playbook. A solid workflow keeps everyone on the same page and pulling in the same direction. Here are six straightforward steps your team can start using right away.

Step 1: Nail Down Your Core Concept

Before you ask for a single opinion, you have to be crystal clear on what you’re actually testing. A fuzzy idea guarantees fuzzy feedback. Your first job is to boil your concept down to its absolute essence.

This doesn't mean you need a polished, pixel-perfect design. Early on, simple is powerful. Your "concept artifact" could be:

- A quick one-paragraph pitch: In simple terms, what problem are you solving, who is it for, and what makes your solution different?

- A basic sketch or wireframe: Just enough to visualize the main idea. Tools like Figma or Miro are fantastic for this, letting your whole team jump in and collaborate.

- A short video or a few slides: Tell the story of your concept. This is a great way to walk someone through the idea, no matter where they are.

The goal here is to create something a real person can understand in less than a minute.

Step 2: Find Your People

This is where so many teams go wrong. You can't just ask anyone for feedback. Glowing reviews from people who would never actually use your product are worse than useless—they're a trap.

You need to know exactly who your ideal user is. Think beyond basic demographics and get into their heads. What are their daily habits? What really frustrates them? For remote teams, digital tools make it easier than ever to find these niche groups.

Getting sharp, honest feedback from five people who are a perfect fit for your product is far more valuable than getting vague praise from 50 random participants.

User testing platforms, dedicated social media communities, or even your own customer email list can be goldmines for finding the right audience.
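In practice, finding the right people usually starts with a short screener. Here is a minimal sketch, assuming a hypothetical persona (a working developer who deploys code at least weekly) and made-up respondent fields; a real screener would use whatever criteria define your own ideal user.

```python
from dataclasses import dataclass


@dataclass
class Respondent:
    name: str
    role: str
    writes_code_at_work: bool
    deploys_per_week: int


def matches_persona(r: Respondent) -> bool:
    """Hypothetical screener: working developers who deploy at least weekly."""
    return r.writes_code_at_work and r.deploys_per_week >= 1


# Made-up screener responses.
respondents = [
    Respondent("P1", "Backend developer", True, 5),
    Respondent("P2", "Retired gardener", False, 0),
    Respondent("P3", "Engineering student", True, 0),
]
qualified = [r.name for r in respondents if matches_persona(r)]
print(qualified)
```

Only the first respondent passes here; the other two would give feedback that sounds useful but comes from outside the target audience.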

Step 3: Create Your Test Materials

Now that you know what you're testing and who you're testing it with, it's time to build the test itself. This usually means turning your concept into a survey, an interview guide, or a simple interactive prototype.

Keep it short and to the point. People participating remotely have a million distractions, so you have to respect their time. A great concept test focuses on just a few critical questions. Are you trying to gauge the overall appeal? See if the value proposition lands? Or find out if they’d actually pay for it? Pick one or two and stick to them.

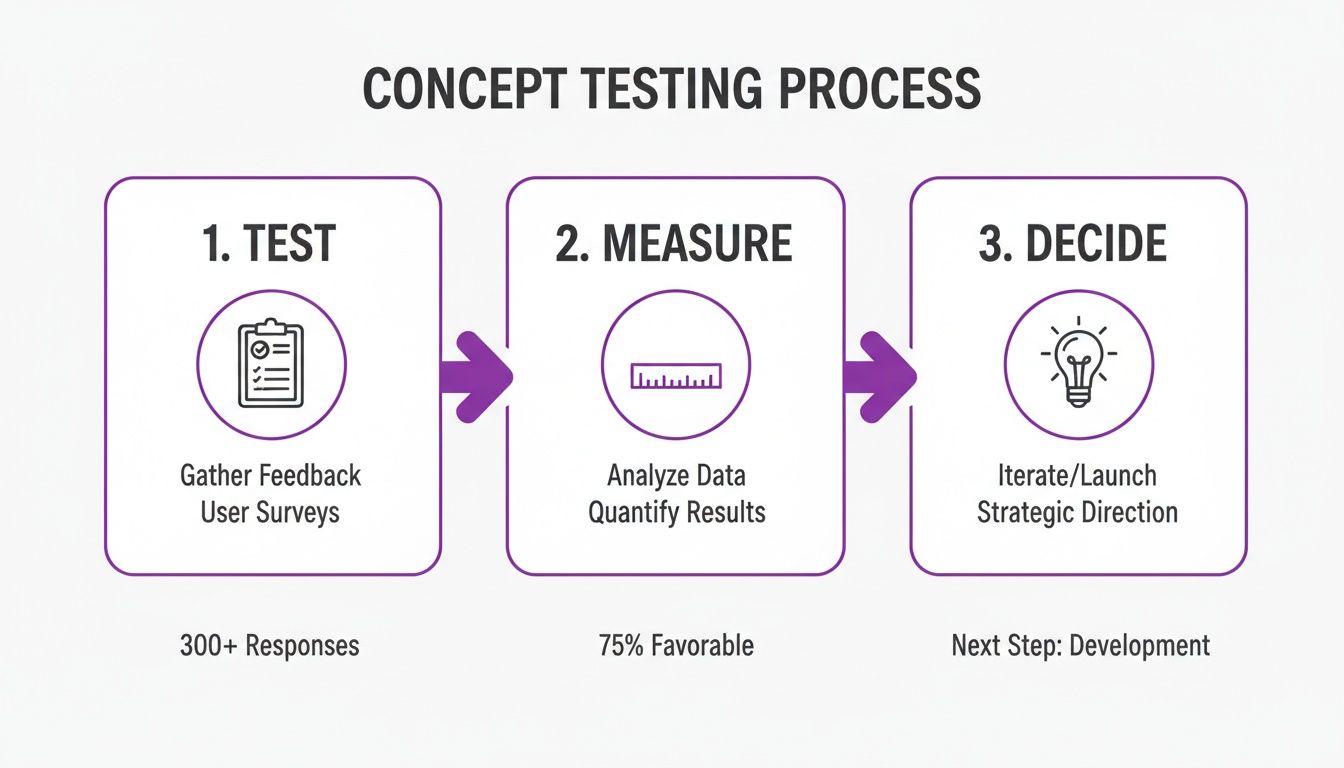

This simple loop—test, measure, decide—is the heart of the process. It's not about just collecting data; it's about using that data to make a smart, informed decision.

Step 4: Pick the Right Digital Tools

Your tech stack can make or break a remote concept test. The tools you choose need to make it easy for your team to work together and even easier for your participants to give you feedback.

Your remote toolkit will probably include:

- Survey Platforms: Tools for building and sending out questionnaires.

- User Research Platforms: Services that handle the heavy lifting of recruiting participants and running the tests.

- Video Conferencing Software: A must-have for live interviews and virtual focus groups.

- Collaboration Hubs: A central spot like Slack or your project management tool to share findings as they roll in.

Step 5: Run the Test Smoothly

With all your prep work done, it’s go-time. For a remote test, clear and constant communication is everything. Have one person act as the single point of contact to watch over the test, jump on any technical glitches, and keep the team in the loop.

And remember, you're not in the room with your participants, so your instructions have to be foolproof. Make sure the context and the tasks are explained so clearly that there’s no room for confusion. This is a vital part of many product discovery techniques that help teams avoid building the wrong thing.

Step 6: Turn Feedback into an Action Plan

This is the most important step. Raw data doesn't do you any good until you figure out what it means and what you’re going to do about it.

Get all your findings—survey numbers, interview notes, and any other observations—into one shared space. Then, book a virtual workshop for the whole team to dive in together.

Start by grouping the feedback into themes. What did people love? What totally confused them? What did they hate? From there, you can map out your next steps. The goal isn't a 50-page report; it’s a simple, clear action plan:

- Green Light: The concept works. We're good to move on to the next phase.

- Yellow Light: The core idea is solid, but some key things need fixing. Time to iterate and re-test.

- Red Light: This isn't landing with users. We need to pivot or head back to the drawing board.
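The traffic-light call can be made explicit so that everyone applies the same bar. The thresholds below are placeholders, not benchmarks; the sketch assumes you summarize a test as two shares between 0 and 1 (purchase intent and clarity) and that your team agrees on its own cut-offs before looking at the results.

```python
def traffic_light(purchase_intent, clarity, go=0.6, rework=0.4):
    """Map two top-line shares (0..1) to a green/yellow/red decision.

    go and rework are hypothetical cut-offs; set your own before testing.
    """
    for value in (purchase_intent, clarity):
        if not 0 <= value <= 1:
            raise ValueError("shares must be between 0 and 1")
    # The weakest metric drives the decision in this sketch.
    weakest = min(purchase_intent, clarity)
    if weakest >= go:
        return "green"   # concept works: move to the next phase
    if weakest >= rework:
        return "yellow"  # core idea holds up: iterate and re-test
    return "red"         # not landing: pivot or rethink


print(traffic_light(0.72, 0.80))
print(traffic_light(0.72, 0.45))
print(traffic_light(0.20, 0.90))
```

Fixing the cut-offs in advance keeps the team from moving the goalposts once the numbers come in.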

Real-World Examples of Concept Testing Wins

It’s one thing to talk about frameworks and theories, but it’s another to see concept testing save the day in the real world. This process isn't just an academic exercise. It’s a pragmatic tool that has steered countless products away from failure and helped turn good ideas into truly great ones.

When you look at the stories behind iconic brands and scrappy startups, you see a common thread: they listened to their customers first. These examples show how a little early feedback can lead to massive wins down the road.

The Launch of a Beverage Giant

Let's go back to the early 1980s. The Coca-Cola Company had a big idea: a sugar-free cola that carried the flagship Coke name. But they didn't just cross their fingers and hope for the best. Instead, they ran extensive concept tests to see how people would react.

This was a huge gamble. Their existing diet drink, Tab, had a loyal following, and launching a new product could have easily cannibalized its sales or, even worse, damaged the pristine Coca-Cola brand.

The results from the concept tests were crystal clear. Consumers overwhelmingly preferred a diet drink they could associate with the familiar taste and trust of Coca-Cola. That data gave the company the green light it needed to move forward. The result? Diet Coke became a cultural icon and one of the most successful beverage launches in history.

Validating a B2B SaaS Pivot

Concept testing isn't just for consumer titans. Picture a modern B2B SaaS startup aiming to build a massive, all-in-one project management platform. The team was convinced that more features would mean more customers.

But before writing a single line of code, they wisely decided to test the concept with their target audience—small marketing agencies. They put together simple mockups and a one-pager outlining the platform’s sprawling feature set.

The feedback was a total wake-up call. Potential users felt overwhelmed. They didn't want another bloated, complex tool. What they really needed was a simple, lightning-fast way to handle one specific pain point: client approvals.

This crucial insight, discovered through just a handful of conversations, triggered a complete pivot. The team scrapped their grand vision and focused on building a minimalist client approval tool instead.

The new, laser-focused concept tested brilliantly. When it launched, it found a passionate user base almost immediately. This is a perfect example of how concept testing works hand-in-hand with building one of the best minimum viable product examples—it forces you to nail the core value proposition first.

From Skincare Concept to Market Leader

The beauty industry is another place where this process shines. A brand developing a new acne treatment tested its concept with young adults and found a strong 72% purchase intent before the product even existed.

The testing also uncovered that the messaging around natural ingredients was a huge selling point. Armed with this knowledge, the brand centered its entire marketing campaign on those elements. After launching in 2019, the product became the number one acne treatment on the market, hitting over $50 million in sales in just its first two years.

Common Mistakes and How to Avoid Them

Concept testing can feel like you have a crystal ball, but a few common missteps can easily muddy the waters, leading to wasted time and effort. Knowing what these pitfalls are is the first step toward getting feedback you can actually trust. Ironically, most of these mistakes start with good intentions but end up giving you skewed data that points your team in the completely wrong direction.

One of the biggest blunders is asking leading questions. It’s so easy to accidentally phrase a question in a way that nudges people toward the answer you’re hoping for. Think of something like, "Wouldn't this amazing new feature make your life so much easier?" You’re basically priming them to agree with you, which poisons the data.
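A quick, admittedly crude, way to catch the most obvious leading questions is to scan a draft for loaded words before it goes out. The word list and the `flag_leading` helper below are illustrative assumptions; they will miss subtler bias and are no substitute for a colleague's review.

```python
import re

# Hypothetical list of loaded words and openers; extend it for your own surveys.
LOADED_TERMS = ["amazing", "so much easier", "wouldn't", "don't you", "great"]


def flag_leading(question):
    """Return the loaded terms found in a survey question (case-insensitive)."""
    # Normalize curly apostrophes so "Wouldn’t" and "Wouldn't" both match.
    lowered = question.lower().replace("’", "'")
    return [
        term
        for term in LOADED_TERMS
        if re.search(r"\b" + re.escape(term) + r"\b", lowered)
    ]


print(flag_leading("Wouldn't this amazing new feature make your life so much easier?"))
print(flag_leading("What are your first impressions of this?"))
```

The first question trips three terms, while the neutral rewording returns an empty list.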

Another classic mistake is testing with the wrong audience. It feels great to get glowing reviews, but if they're coming from people who would never actually buy your product, you're just collecting vanity metrics. It's a false sense of security that can set you up for a very painful reality at launch.

Ignoring Uncomfortable Feedback

Maybe the most dangerous mistake of all is hearing only what you want to hear. When a participant gives you a piece of critical feedback or seems confused, that isn't a failure—it's a gift. Dismissing that input because it doesn't fit your grand vision is a fast track to building something nobody wants.

Think of criticism as a valuable data point. The negative feedback often uncovers the most important opportunities for improvement, shining a light on blind spots your team might have missed completely.

Instead of getting defensive, dig deeper into the critique. Ask "why?" to get to the root of their concern. For remote teams, a centralized ‘insights hub’—like a shared doc or a Miro board—is a great way to log all feedback, good and bad. This makes sure everyone on the team is looking at the same raw, unfiltered data.

Best Practices for Remote Teams

To stay clear of these traps, your remote team can put a few simple best practices into play. It's all about fostering clarity and objectivity in your process.

- Pressure-Test Ideas Internally First: Before you even think about showing a concept to users, run it by people from different departments on your own team. This is a simple gut check that can help turn a half-baked idea into something solid and testable.

- Use Neutral Language: Keep your questions open-ended. Instead of asking if an idea is "good," try something like, "What are your first impressions of this?" or "How do you see this fitting into your daily routine?"

- Create Detailed User Personas: Don't just wing it when it comes to recruiting. Build out specific, actionable personas for your target audience and use them as a guide for finding participants. This ensures you’re talking to people whose opinions genuinely matter.

- Embrace the "Red Flags" Meeting: Set aside a specific meeting after a round of testing to talk only about the negative feedback and concerns. This forces everyone to confront the uncomfortable truths and, more importantly, figure out how to act on them.

Got Questions? We've Got Answers.

Diving into concept testing for the first time? It's normal to have a few questions pop up. Let's clear up some of the most common ones so you can move forward with confidence.

How Many People Should I Actually Talk To?

This is the classic "it depends" answer, but for a good reason. Your goal dictates the number.

If you're after the why—the rich, qualitative insights behind people's reactions—you can learn a surprising amount from just 5 to 8 participants per user group. You'll start hearing the same themes over and over again, which is exactly what you want.

But if you need hard numbers to back up a decision, you'll need to go bigger. For quantitative data that gives you statistical confidence, aim for a sample size of 100 people or more.
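To see why roughly 100 responses is a sensible floor, it helps to look at the margin of error for a survey percentage. The sketch uses the standard normal-approximation formula at 95% confidence (z = 1.96) and assumes a simple random sample; the function name is ours.

```python
import math


def margin_of_error(n, p=0.5, z=1.96):
    """Approximate 95% margin of error for a proportion p from n responses.

    Uses the normal approximation z * sqrt(p * (1 - p) / n); p = 0.5 is the
    worst case, which gives the widest margin.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    return z * math.sqrt(p * (1 - p) / n)


for n in (30, 100, 400):
    print(n, f"+/- {margin_of_error(n):.1%}")
```

With 100 responses the worst-case margin is about 10 percentage points either way, and it takes roughly four times as many responses to halve it.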

Isn't This Just Usability Testing?

Nope! It's a common point of confusion, but they serve very different purposes at different times.

Think of it like this:

- Concept Testing is about the idea. It asks, "Do people even want this? Does it solve a real problem for them?" You do this before you've invested heavily in building anything.

- Usability Testing is about the execution. It asks, "Now that we've built it, can people actually use it easily?" This happens once you have a prototype or a finished product.

Concept testing saves you from building the wrong thing; usability testing saves you from building the thing wrong.

What Are the Best Tools for a Remote Team?

Running these tests when your team is spread out is all about having the right digital toolkit. The good news is there are plenty of great options out there.

The key is to find platforms that let you create surveys or show off prototypes, and critically, give you access to a pool of real people to test with. Combining these features means you can go from an idea to actionable feedback in a matter of hours, not weeks, which is a game-changer for remote teams.

What's This Going to Cost Me?

The budget for concept testing can be as big or as small as you need it to be.

If you're just starting out, a simple DIY survey sent to your company's email list might only cost you your time. It’s a great way to get your feet wet.

On the flip side, a large-scale study using a specialized platform that recruits a very specific type of participant could run from a few hundred to several thousand dollars. But when you weigh that against the massive cost of developing a product nobody wants, it's a pretty smart investment.

Ready to stop guessing and start validating? Bulby provides a structured, AI-guided brainstorming platform that helps your remote team refine ideas into test-worthy concepts. Transform your creative process and build products your customers will love. Discover how Bulby can guide your next great idea.